Advanced Data Engineering Training and Placement

Train with a Founder & Industry Expert with 12+ Years of Experience.

Secure Your Dream Job in Just 4 Months!

Top Rated in 2024

Book a Free Live Demo

92% of students got placed. It's your turn: register now.

Course curriculum

- Conditional statements (if, elif, else)

- Loops (for, while)

- Control flow tools (break, continue, pass)

- Lesson 4: Lists and Tuples.

- Creating and using lists

- Understanding tuples and their uses

- Creating and using dictionaries

- Dictionary methods and operations

- Understanding sets and their uses

- String operations and methods

- String formatting

- Working with regular expressions

- Lesson 7: Functions

- Defining and calling functions

- Function arguments and return values

- Scope and lifetime of variables

- Lambda functions

- Importing modules

- Standard library overview

- Creating and using packages

- Managing dependencies with pip

- Lesson 9: Classes and Objects

- Introduction to OOP

- Creating classes and objects

- Instance variables and methods

- Inheritance and polymorphism

- Encapsulation and abstraction

- Magic methods and operator overloading

- Lesson 11: File Handling

- Reading from and writing to files

- Working with file paths

- Using context managers

- Understanding exceptions

- Try, except, else, and finally blocks

- Creating custom exceptions

- Lesson 13: JSON and CSV

- Reading and writing JSON data

- Working with CSV files

- Parsing and processing data

- Lesson 14: Understanding Comprehensions

- List comprehensions

- Dictionary comprehensions

- Set comprehensions

- Nested comprehensions

- Conditional comprehensions

- Comparing comprehensions with loops

- Best practices and common pitfalls

- Lesson 17: Iterator Protocol

- Understanding iter() and next()

- Built-in iterators in Python

- Implementing your own iterator classes

- Use cases for custom iterators

- itertools functions: count(), cycle(), chain()

- Combining iterators with comprehensions

- Lesson 20: Introduction to Generators

- Understanding yield keyword

- Generator functions vs. regular functions

- Syntax and use cases

- Comparison with list comprehensions

- Chaining generators

- Generators for data streaming and processing

- Lesson 23: Basics of Regular Expressions

- Introduction to regex syntax

- Using the re module in Python

- Matching and searching

- Grouping and capturing

- Replacing and splitting text

- Lookahead and lookbehind assertions

- Non-capturing groups

- Practical examples in data validation and parsing

- Lesson 26: Introduction to Datetime Module

- Understanding datetime, date, time, and timedelta

- Creating and formatting dates and times

- Adding and subtracting dates and times

- Comparing dates and times

- Working with pytz module

- Converting between time zones

- Lesson 29: Web Scraping

- Introduction to web scraping

- Using libraries like Beautiful Soup and Scrapy

- Parsing HTML and XML

- Module 1: Introduction to SQL and Databases

- Lesson 1: Overview of Databases

- Understanding Databases: Types and Uses

- Relational Databases vs. NoSQL Databases

- Introduction to SQL (Structured Query Language)

- Installing and Setting Up a SQL Database (SQL Server)

- Using SQL Interfaces (SQL Server Management Studio, SSMS)

- Connecting to a Database

- Lesson 1: Introduction to SQL Syntax

- Basic SQL Commands: SELECT, FROM

- Filtering Data with WHERE Clauses

- SQL Syntax Rules and Best Practices

- Selecting Specific Columns

- Using Aliases for Columns and Tables

- Sorting Data with ORDER BY

- Using Comparison Operators

- Using Logical Operators (AND, OR, NOT)

- Handling NULL Values

- Lesson 1: Aggregate Functions

- Introduction to Aggregate Functions: COUNT, SUM, AVG, MAX, MIN

- Combining Aggregate Functions with GROUP BY

- Filtering Grouped Data with HAVING

- Understanding GROUP BY Clause

- Grouping by Multiple Columns

- Using ROLLUP and CUBE for Advanced Grouping

- Lesson 1: Understanding Joins

- Introduction to Joins: INNER JOIN, LEFT JOIN, RIGHT JOIN, FULL JOIN

- Joining Multiple Tables

- Aliasing Tables in Joins

- Self Joins

- Cross Joins

- Using Subqueries with Joins

- Lesson 1: Subqueries and Nested Queries

- Writing Subqueries in SELECT, FROM, and WHERE Clauses

- Correlated Subqueries

- Using Subqueries for Data Analysis

- Introduction to Window Functions

- Using ROW_NUMBER, RANK, and DENSE_RANK

- Applying PARTITION BY and ORDER BY in Window Functions

- Introduction to CTEs

- Writing Recursive CTEs

- Using CTEs for Complex Queries

- Lesson 1: Inserting Data

- Basic INSERT Statements

- Inserting Multiple Rows

- Using SELECT for Inserting Data

- Basic UPDATE Statements

- Using Subqueries in UPDATE

- DELETE Statements and Safe Deletion Practices

- Module 1: Introduction to NoSQL and MongoDB

- NoSQL database overview

- Installing and setting up MongoDB

- Basic CRUD operations (Create, Read, Update, Delete)

- MongoDB data modeling and schema design

- Aggregation framework and pipeline

- Indexing for performance optimization

- Working with geospatial data

- Backup and restore strategies

- Using PyMongo to interact with MongoDB in Python

- Data analysis with MongoDB and Pandas

- Visualizing MongoDB data with popular libraries

- Case studies and real-world applications

- Module 1: Introduction to Big Data and PySpark

- Understanding Big Data concepts

- Setting up PySpark environment

- Basics of RDDs (Resilient Distributed Datasets)

- Transformations and Actions in PySpark

- Introduction to DataFrames

- Performing SQL operations on DataFrames

- Data manipulation and cleaning with PySpark

- Working with different file formats (CSV, JSON, Parquet)

- Machine learning with PySpark MLlib

- Performance tuning and optimization

- Handling large-scale data processing

- Real-world project: End-to-end data pipeline with PySpark

- Module 1: Introduction to NumPy

- Lesson 1: Getting Started with NumPy

- What is NumPy and why use it?

- Installing NumPy

- Importing NumPy

- Basic operations with NumPy

- Understanding NumPy arrays

- Creating arrays from lists and tuples

- Using built-in NumPy functions to create arrays (arange, zeros, ones, full, linspace, eye)

- Array attributes (shape, size, dtype, ndim)

- Reshaping arrays

- Indexing and slicing arrays

- Array broadcasting

- Lesson 3: Operations on Arrays

- Arithmetic operations

- Universal functions (ufuncs)

- Aggregate functions (sum, mean, std, var, min, max)

- Boolean operations and masking

- Sorting arrays

- Unique elements

- Basic linear algebra with NumPy

- Matrix operations (dot product, cross product)

- Solving linear equations

- Eigenvalues and eigenvectors

- Matrix decomposition (LU, QR, SVD)

- Lesson 5: Structured Arrays and Record Arrays

- Understanding structured arrays

- Creating and manipulating structured arrays

- Record arrays and their use cases

- Field access and modification

- Reading data from files (text, CSV)

- Writing arrays to files

- Handling large datasets with memory mapping

- Saving and loading NumPy objects with np.save, np.load, np.savez

- Lesson 7: Broadcasting and Vectorization

- Deep dive into broadcasting rules

- Vectorized operations for performance

- Using np.vectorize for vectorization

- Module 1: Introduction to Pandas

- Understanding Pandas and its role in data science

- Installation and setup

- Series: Creation, manipulation, and operations

- DataFrame: Creation, manipulation, and operations

- Indexing and selecting data

- Handling missing data

- Data alignment

- Merging, joining, and concatenating data

- GroupBy operations

- Pivot tables and cross-tabulations

- Handling duplicates

- Data transformation

- String operations

- Reading and writing data from/to different file formats (CSV, Excel, SQL)

- Date and time data types and tools

- Time series basics

- Resampling and frequency conversion

- Window functions

- Performance improvement using categorical data and memory optimization

- Module 1: Introduction to GitHub

- Overview of Version Control Systems

- Setting up Git and GitHub accounts

- Basic Git commands (clone, commit, push, pull)

- Creating and managing repositories

- Branching and merging strategies

- Pull requests and code reviews

- Managing issues and milestones

- Best practices for collaborative projects

- Module 1: Getting Started with VSCode

- Installing and configuring VSCode

- Key features and extensions for data science

- Customizing the editor for efficiency

- Integrated terminal and version control

- Module 1: Introduction to Jupyter Notebooks

- Installing Jupyter Notebook

- Notebook interface and basic features

- Markdown and code cells

- Creating and organizing notebooks

- Importing and exploring data with Pandas

- Data visualization with Matplotlib and Seaborn

- Interactive widgets with ipywidgets

- Sharing notebooks with JupyterHub and nbviewer

Frequently Asked Questions

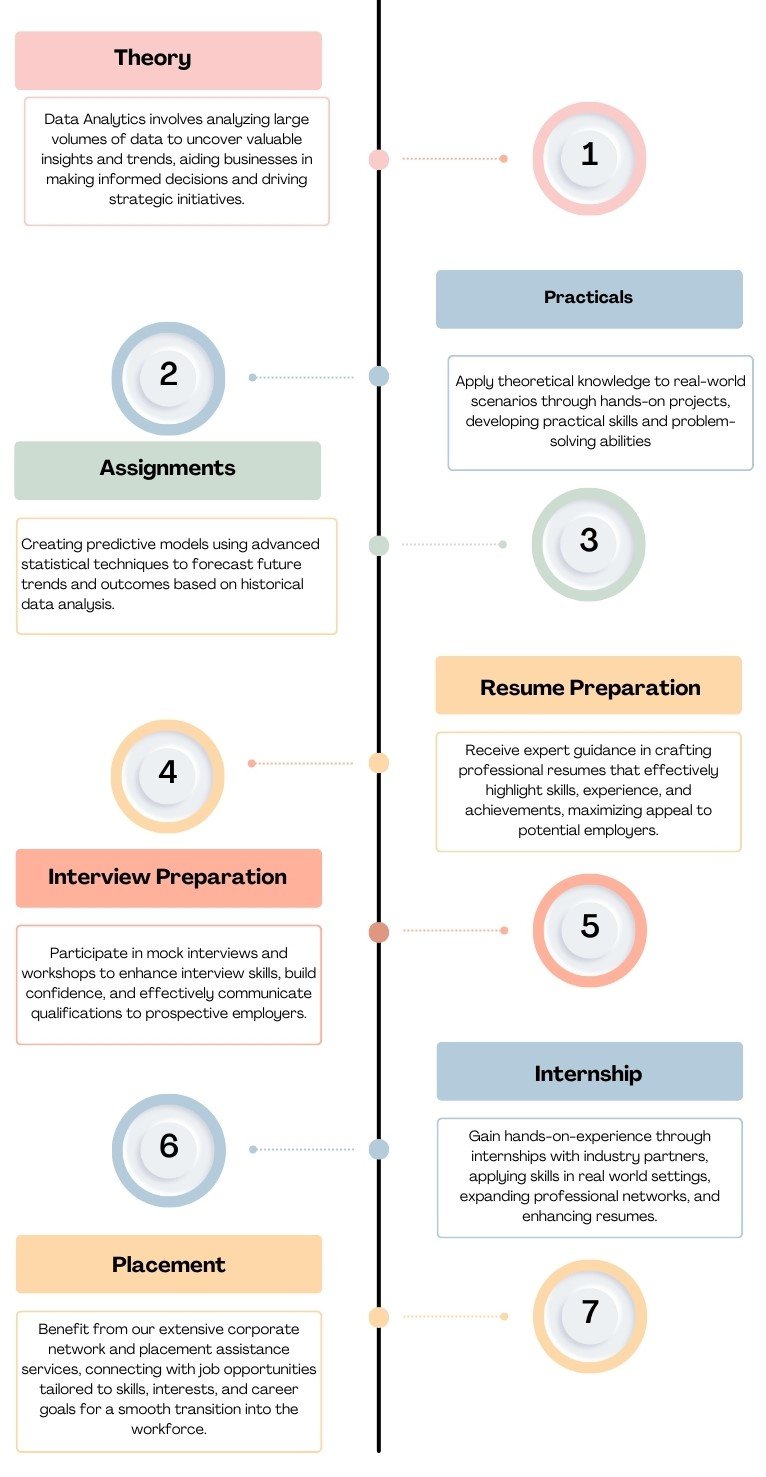

4 months, inclusive of projects and portfolio development.

Basic knowledge of statistics and programming is helpful but not required.

Data Analytics Certification upon successful completion.

Yes, including resume building, interview preparation, and job placement assistance.

Flexible options available for both live and online learning in Hyderabad.

Testimonials

Mastering Data Science with Syntax Minds

Embarking on the data science course at Syntax Minds in Hyderabad truly transformed my career trajectory. The curriculum’s depth, covering everything from machine learning to statistical analysis, paired with hands-on projects led by industry experts, was exactly what I needed to advance my skills. The real-world applications I learned here have been instrumental in my professional success. For anyone serious about a career in data science, Syntax Minds is your launchpad!

Venkat. S

Data Scientist at DataWise Analytics, Bengaluru.

From Beginner to Data Science Expert

As someone who started with a basic understanding of data analysis, the data science course at Syntax Minds was a revelation. It not only deepened my technical skills but also enhanced my analytical thinking. The personalized guidance and practical approach provided in Hyderabad’s engaging learning environment exceeded all my expectations. I strongly recommend Syntax Minds to anyone aspiring to break into the data science field!

Madhukar.G

Data Analyst at NextGen Insights Pvt. Ltd, Mumbai.

Unparalleled Learning Experience in Data Science

Syntax Minds offers an unmatched learning journey in data science. Their curriculum is at the forefront of current industry standards, focusing on both theory and practical application. What distinguishes them is their dedication to student success, offering extensive resources, modern tools, and mentorship. Post-graduation, I have a comprehensive portfolio demonstrating my skills in predictive modeling, data analysis, and machine learning, making me a sought-after professional in the job market. A heartfelt thank you to Syntax Minds for guiding my career to new heights!

Joanne Ellis

Machine Learning Engineer at AI Innovations, New Delhi.

Career Transformation through Syntax Minds' Data Science Course

Opting for Syntax Minds’ data science training in Hyderabad was the best decision for my professional development. The course not only covered key areas like big data analytics, machine learning, and data visualization but also taught me to approach problems strategically. The community support and networking opportunities have been tremendously beneficial. Since completing the course, I’ve been able to lead data-driven projects with confidence and have experienced remarkable career growth. Syntax Minds is at the cutting edge of data science education.