Full Stack Data Scientist

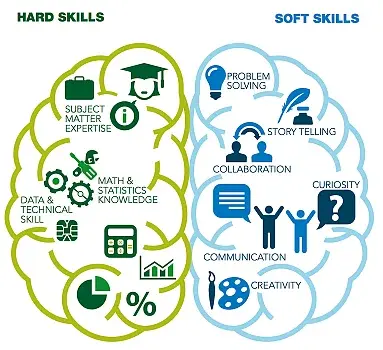

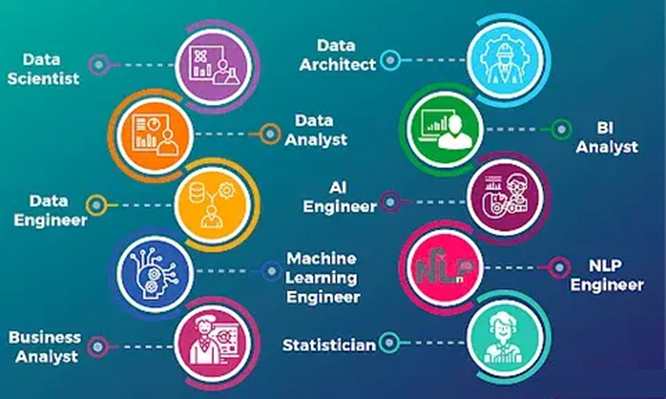

Becoming a Full Stack Data Scientist involves mastering a wide range of skills across data science, data engineering, machine learning, and software engineering. A “Full Stack” Data Scientist can handle everything from data collection and cleaning to building and deploying machine learning models and working with production-level systems. Here’s a roadmap to become a Full Stack Data Scientist:

Master Core Data Science Skills

Start by mastering the fundamental skills required for data analysis and machine learning:

- Data Analysis & Visualization:

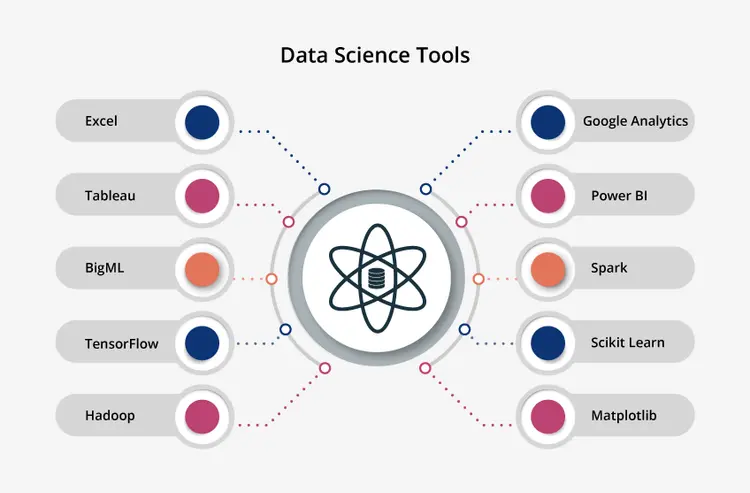

- Tools/Technologies: Python (Pandas, NumPy), R, Excel

- Skills:

- Data wrangling and cleaning (handling missing values, outliers, etc.)

- Exploratory Data Analysis (EDA)

- Data visualization (using tools like Matplotlib, Seaborn, or Plotly in Python)

- Machine Learning:

- Tools/Technologies: Scikit-learn, TensorFlow, PyTorch, XGBoost, LightGBM

- Skills:

- Supervised and unsupervised learning algorithms (linear regression, decision trees, clustering, etc.)

- Model selection, evaluation, and tuning (cross-validation, grid search, etc.)

- Feature engineering and scaling

- Understanding overfitting and the bias-variance tradeoff

- Statistical Analysis:

- Skills:

- Probability, distributions, hypothesis testing

- A/B testing, confidence intervals

- Statistical methods to interpret data patterns

- Communication:

- Skills:

- Communicating results through data stories (presentations, dashboards)

- Writing clear reports and documenting your analysis and findings

- Translating technical findings into actionable business insights
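
To make the hypothesis testing and A/B testing skills listed above more concrete, here is a minimal sketch of a two-proportion z-test using only the Python standard library. The function name `two_proportion_z_test` and the conversion counts are illustrative assumptions, not data from a real experiment, and the test relies on the normal approximation, so it is only reasonable for fairly large samples.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). Assumes samples are large enough for the
    normal approximation to hold.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical experiment: 120/1000 conversions for A, 150/1000 for B.
z, p_value = two_proportion_z_test(120, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the observed difference is unlikely under the assumption that both variants convert at the same rate. Whether that difference matters to the business is a separate question, which is where the communication skills above come in.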

Learn Data Engineering

A Full Stack Data Scientist needs to understand how to handle large datasets and make them ready for analysis or modeling.

- Data Collection & Storage:

- Tools/Technologies: SQL, NoSQL (MongoDB, Cassandra), AWS, Azure, Google Cloud

- Skills:

- Writing SQL queries for relational databases

- Understanding data pipelines and ETL (Extract, Transform, Load) processes

- Working with cloud-based data storage solutions (S3, Google Cloud Storage)

- Data Processing & Pipelines:

- Tools/Technologies: Apache Spark, Apache Kafka, Airflow

- Skills:

- Building scalable data pipelines for processing large volumes of data

- Data streaming and batch processing concepts

- Automating workflows and scheduling jobs

- Big Data:

- Tools/Technologies: Hadoop, Spark

- Skills:

- Distributed computing concepts

- Processing big data using Hadoop or Spark
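
The ETL and SQL skills listed above can be sketched at toy scale with the standard library alone. The example below assumes a hypothetical CSV export (`RAW_CSV`) and an in-memory SQLite database. In a real pipeline the source, the cleaning rules, and the target store would differ, and a scheduler such as Airflow would typically run each step.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file, API, or queue.
RAW_CSV = """order_id,customer,amount
1,alice,20.50
2,bob,
3,alice,15.00
4,carol,42.25
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with a missing amount and cast column types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), row["customer"], float(row["amount"])))
    return cleaned


def load(rows, conn):
    """Load: write cleaned rows into a relational table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)

# A SQL aggregation over the loaded data.
totals = conn.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()
print(totals)
```

Keeping extract, transform, and load as separate functions makes each step easy to test and to swap out, for example by replacing the SQLite target with a cloud data warehouse.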

Learn Software Engineering for Data Science

Data scientists need to be proficient in writing efficient, maintainable, and scalable code. This is where “Full Stack” really comes in.

- Programming Skills:

- Languages: Python, Java, Scala, SQL, Bash

- Skills:

- Writing clean, modular code

- Version control (Git/GitHub/GitLab)

- Working with APIs and RESTful services

- Software Design:

- Skills:

- Object-oriented programming (OOP) and design patterns

- Testing (unit testing, integration testing)

- Code optimization and profiling

- Frameworks & Libraries:

- Tools/Technologies: Flask/FastAPI/Django (for web APIs), Docker (containerization), Kubernetes (for scaling)

- Skills:

- Building APIs to serve models (Flask or FastAPI)

- Deploying and managing containerized applications (Docker)

- Container orchestration with Kubernetes

- Building scalable architectures
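
As a small illustration of the clean code and unit testing skills listed above, the sketch below pairs one focused function with tests written using the standard `unittest` module. The function `min_max_scale` and its edge-case behavior are assumptions chosen for the example, not a reference implementation.

```python
import unittest


def min_max_scale(values):
    """Rescale numbers to the [0, 1] range.

    Raises ValueError on empty input; returns all zeros if every value is equal.
    """
    if not values:
        raise ValueError("values must not be empty")
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


class MinMaxScaleTest(unittest.TestCase):
    def test_scales_to_unit_range(self):
        self.assertEqual(min_max_scale([10, 20, 30]), [0.0, 0.5, 1.0])

    def test_constant_input(self):
        self.assertEqual(min_max_scale([5, 5]), [0.0, 0.0])

    def test_empty_input_raises(self):
        with self.assertRaises(ValueError):
            min_max_scale([])


# Run the tests programmatically so the script does not exit the interpreter.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(MinMaxScaleTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

In a project, the tests would normally live in their own file and run with `python -m unittest`, ideally on every commit through a CI job.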

Learn Deployment & Model Serving

A Full Stack Data Scientist should know how to take a model from development to production.

- Model Deployment:

- Tools/Technologies: Docker, Flask/FastAPI, TensorFlow Serving, AWS Lambda, Google Cloud AI, MLflow

- Skills:

- Deploying machine learning models as APIs for real-time predictions

- Using cloud platforms like AWS, GCP, or Azure for model deployment

- Automating the deployment pipeline using CI/CD tools

- Monitoring and Scaling:

- Skills:

- Monitoring models in production (tracking performance and drift)

- Handling model updates and versioning

- Scaling models for high-traffic environments
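
One common way to track the drift mentioned above is the Population Stability Index (PSI), which compares the distribution of a feature at training time with its distribution in production. The sketch below is a simplified version under stated assumptions: equal-width bins taken from the reference sample, a small `eps` to avoid empty bins, and synthetic data. The function name and parameters are illustrative.

```python
import math


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a reference sample and a production sample.

    Bins are equal-width over the range of the reference sample; values
    outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant reference sample

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    exp_frac = bin_fractions(expected)
    act_frac = bin_fractions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_frac, act_frac))


# Synthetic data: the production sample is shifted toward higher values.
reference = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]

print(population_stability_index(reference, reference))
print(population_stability_index(reference, production))
```

Identical samples give a PSI of zero, and the value grows as the two distributions diverge. Values around 0.1 and 0.25 are often quoted as rule-of-thumb alert levels, but suitable thresholds depend on the feature and the model.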

Understand Deep Learning and Advanced AI

A Full Stack Data Scientist should also understand deep learning, especially when working with complex data such as images, text, or speech.

- Deep Learning:

- Tools/Technologies: TensorFlow, PyTorch, Keras, OpenCV, Hugging Face Transformers

- Skills:

- Neural networks, CNNs, RNNs, LSTMs, GANs

- Transfer learning and pre-trained models

- Natural Language Processing (NLP) for text-based models

- Computer Vision for image-based models
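
Before reaching for a framework, it can help to see what a neural network's forward pass does. The sketch below implements a tiny fully connected network in plain Python, with hand-picked, hypothetical weights (`W1`, `B1`, `W2`, `B2`). Frameworks such as PyTorch or TensorFlow perform the same arithmetic on tensors and add automatic differentiation for training, which this example leaves out.

```python
import math


def relu(vector):
    """Element-wise ReLU activation."""
    return [max(0.0, x) for x in vector]


def softmax(vector):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtract the max for numerical stability before exponentiating.
    peak = max(vector)
    exps = [math.exp(x - peak) for x in vector]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """Fully connected layer: one output per row of `weights`."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters for a 3 -> 2 -> 2 network.
W1 = [[0.5, -1.0, 0.25], [1.0, 0.5, -0.5]]
B1 = [0.0, 0.1]
W2 = [[1.0, -1.0], [-1.0, 1.0]]
B2 = [0.0, 0.0]


def forward(x):
    """Hidden layer with ReLU, then an output layer with softmax."""
    hidden = relu(dense(x, W1, B1))
    return softmax(dense(hidden, W2, B2))


probs = forward([1.0, 2.0, 3.0])
print(probs)
```

Training consists of adjusting the weights so that these output probabilities match labeled examples. That is the part that gradient-based optimizers in deep learning libraries automate.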

Cloud & Big Data Ecosystems

Understanding how to work with cloud infrastructure and big data tools is critical for scaling up projects.

- Cloud Platforms:

- Tools/Technologies: AWS (S3, EC2, SageMaker), GCP (BigQuery, Vertex AI), Azure (Azure Machine Learning)

- Skills:

- Deploying and managing applications in the cloud

- Using cloud-based services for machine learning

- Data storage and computation in the cloud

- Distributed Computing:

- Skills:

- Understanding distributed processing models and frameworks (e.g., MapReduce, Spark)

- Optimizing performance for large-scale data processing
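
The MapReduce model mentioned above can be illustrated with a single-process word count. This is a sketch of the programming model only. The three phase functions and the sample `partitions` are assumptions for the example, and a real framework such as Hadoop or Spark would run the map and reduce phases in parallel across many machines.

```python
from collections import defaultdict
from functools import reduce


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values for each key into a single count."""
    return {
        key: reduce(lambda a, b: a + b, values)
        for key, values in grouped.items()
    }


# Hypothetical input; each string stands in for a data partition on a separate worker.
partitions = ["spark and hadoop", "spark streaming", "hadoop and spark"]

mapped = [pair for doc in partitions for pair in map_phase(doc)]
word_counts = reduce_phase(shuffle_phase(mapped))
print(word_counts)
```

Because each map call only sees its own partition and each reduce call only sees one key, both phases can be distributed without shared state. The shuffle step is usually the expensive one, since it moves data between machines.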

Build Portfolio and Real-world Experience

- Personal Projects:

- Build end-to-end projects to demonstrate your full-stack capabilities, such as:

- A recommendation system deployed in the cloud

- A predictive model with a web interface and real-time data ingestion

- An NLP-based chatbot or text classifier deployed via API

- Contribute to Open Source:

- Contributing to open-source machine learning, data science, or data engineering projects will deepen your understanding and expand your network.

- Collaborate with Cross-functional Teams:

- Work with data engineers, software developers, and product teams to understand how your work fits into the larger business strategy.

Keep Learning and Stay Updated

Data science evolves quickly, so continuous learning is essential. Follow industry blogs, attend conferences, and take online courses (e.g., Coursera, edX, fast.ai).

Recommended Learning Resources:

- Books:

- “Python Data Science Handbook” by Jake VanderPlas

- “Deep Learning with Python” by François Chollet

- “Designing Data-Intensive Applications” by Martin Kleppmann

- “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron

By following this roadmap, you’ll acquire the skills needed to work as a Full Stack Data Scientist, capable of handling the entire pipeline from data ingestion to model deployment and maintenance.